Geoffrey Hinton’s Warning of Humanity’s Existential Threat from AI

Humanity is just a passing phase in the evolution of intelligence.

Geoffrey Hinton, widely known as the “godfather of artificial intelligence,” resigned from Google a few days ago. In a public Q&A interview, Hinton cautioned against the ‘existential threat’ posed by AI, expressing growing concern about its potential hazards and the possibility that humanity may be just a ‘passing phase’ in the evolution of intelligence.

Geoffrey Hinton at Google


This post summarizes some of the key points from the interview. Check out the original video below.

Outline of the original Q&A Interview

- Why did Geoffrey Hinton step down from Google?

- What is backpropagation?

- Digital computers are better at learning than humans.
  - Backpropagation might be a better learning algorithm than the one in the human brain.
  - Digital computers can share knowledge more efficiently than humans.

- Why should we be scared?
  - AI is getting smarter and can outsmart humans.
  - AI will be good at manipulating us without us noticing.
  - AI might come up with motivations of its own.

- Humanity is just a passing phase in the evolution of intelligence.

- Looks like we’ve used up human knowledge. Would there be a plateau in advancing these technologies?

- AI will be able to perform thought experiments and reasoning.

- Does Geoffrey Hinton regret his research in AI?

Why did Geoffrey Hinton step down from Google?

There were a couple of reasons, but the biggest ones are:

- At 75 years old, Hinton acknowledged a decline in his technical abilities, making it a suitable time for retirement.

- Hinton’s perspective on the relationship between the brain and digital intelligence has changed significantly. Previously, he believed that computer models were not as effective as the brain, but recent advancements like GPT-4 led him to believe that computer models operate in a fundamentally different way that could be better than the human brain.

What is backpropagation?

Many different groups discovered backpropagation. The distinctive contribution of Hinton and his colleagues was using it to learn good internal representations in artificial neural networks. They implemented a tiny language model, which eventually led to the same concept being applied in natural language processing. This was back in the 1980s.

Backpropagation is the algorithm that lets neural networks learn, and it is a foundation of modern AI. Hinton gave an intuitive and in-depth explanation of it, using the example of detecting birds in images to illustrate the role of feature detectors, layers, and weight updates that improve the network’s performance.
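To make the idea concrete, here is a minimal sketch of backpropagation in plain Python. It is not Hinton’s bird detector: the two-input network, sigmoid units, squared-error loss, and the XOR-style toy data are all assumptions chosen for brevity. The forward pass computes hidden “feature detector” activities and an output; the backward pass sends the output error back through the weights to work out how much each weight should change.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNet:
    """Inputs -> one hidden layer of "feature detectors" -> one output unit."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Each hidden unit responds to a learned combination of input features.
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # The output unit combines the hidden features into one prediction.
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def loss(self, data):
        # Mean squared error over (input, target) pairs.
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, x, t, lr):
        h, y = self.forward(x)
        # Backward pass: derivative of (y - t)^2 w.r.t. the output unit's total input.
        d_out = 2.0 * (y - t) * y * (1.0 - y)
        # Send the error back to each hidden unit through its (not yet updated) outgoing weight.
        d_hid = [d_out * w * hj * (1.0 - hj) for w, hj in zip(self.w2, h)]
        # Gradient-descent updates: nudge every weight in the direction that reduces the error.
        for j, hj in enumerate(h):
            self.w2[j] -= lr * d_out * hj
        self.b2 -= lr * d_out
        for j, dj in enumerate(d_hid):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dj * xi
            self.b1[j] -= lr * dj


# Hypothetical toy task: output 1 when exactly one of the two input features is present.
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
net = TinyNet(n_in=2, n_hidden=3, seed=0)
before = net.loss(data)
for _ in range(5000):
    for x, t in data:
        net.train_step(x, t, lr=0.5)
after = net.loss(data)
print(f"loss before training: {before:.3f}, after: {after:.3f}")
```

With these made-up settings the loss should fall well below its starting value; whether a given run fully solves the task depends on the random initialization.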

Digital computers are better at learning than humans.

Backpropagation may be a better learning algorithm than the one in the human brain.

The human brain has about 100 trillion connections, while large language models like GPT-4 have about a trillion. Yet these models, trained with backpropagation, know much more than we do; they have a sort of common-sense knowledge about everything. Because backpropagation can pack more information into fewer connections, it might be a more efficient learning algorithm than whatever the human brain uses.

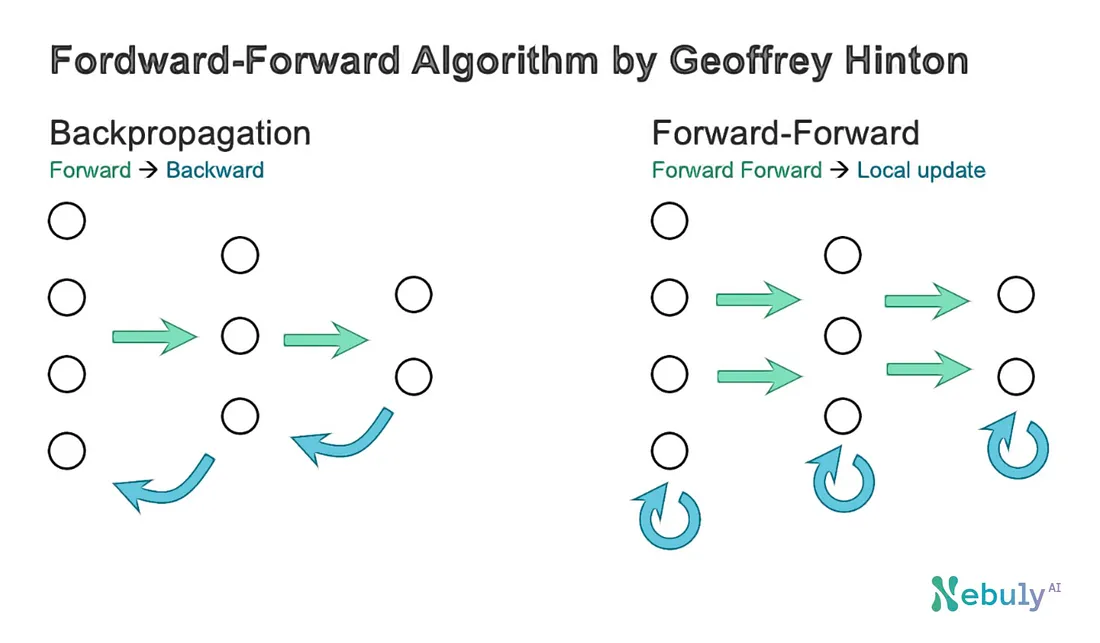

Comment: Geoffrey Hinton is a cognitive psychologist and a computer scientist who has studied both the human brain and computers extensively. He helped popularize the backpropagation algorithm in the 1980s and, more recently, proposed the forward-forward algorithm, a more brain-like learning algorithm intended as a possible replacement for backpropagation.

Backpropagation vs. Forward-Forward Algorithm, image by Nebuly

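For contrast, here is a rough single-layer sketch of the forward-forward idea as described in Hinton’s 2022 proposal: instead of sending errors backward, a layer adjusts its own weights so that its “goodness” (the sum of its squared activities) is high for positive (real) data and low for negative data. The two-dimensional toy data, the threshold of 2.0, the learning rate, and the choice of which points count as negative are all assumptions; the full method stacks several such layers and normalizes activity between them, which this sketch leaves out.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class FFLayer:
    """A ReLU layer trained only with its own local objective (no backward pass between layers)."""

    def __init__(self, n_in, n_out, threshold=2.0, seed=0):
        rng = random.Random(seed)
        self.w = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        self.threshold = threshold

    def activities(self, x):
        return [max(0.0, sum(wi * xi for wi, xi in zip(row, x))) for row in self.w]

    def goodness(self, x):
        # Goodness = sum of squared activities in this layer.
        return sum(h * h for h in self.activities(x))

    def train_step(self, x, is_positive, lr):
        h = self.activities(x)
        g = sum(hj * hj for hj in h)
        # Local loss: -log sigmoid(g - threshold) for positive data and
        # -log sigmoid(threshold - g) for negative data; dl_dg is its derivative.
        if is_positive:
            dl_dg = -sigmoid(self.threshold - g)
        else:
            dl_dg = sigmoid(g - self.threshold)
        for j, hj in enumerate(h):
            if hj > 0.0:  # inactive ReLU units receive no update
                for i, xi in enumerate(x):
                    self.w[j][i] -= lr * dl_dg * 2.0 * hj * xi


# Hypothetical data: "positive" points cluster near (1, 0), "negative" points near (0, 1).
rng = random.Random(42)
positive = [(1.0 + rng.gauss(0, 0.1), rng.gauss(0, 0.1)) for _ in range(50)]
negative = [(rng.gauss(0, 0.1), 1.0 + rng.gauss(0, 0.1)) for _ in range(50)]

layer = FFLayer(n_in=2, n_out=4, seed=0)
for _ in range(200):
    for xp, xn in zip(positive, negative):
        layer.train_step(xp, True, lr=0.05)
        layer.train_step(xn, False, lr=0.05)

mean_pos = sum(layer.goodness(x) for x in positive) / len(positive)
mean_neg = sum(layer.goodness(x) for x in negative) / len(negative)
print(f"mean goodness: positive {mean_pos:.2f}, negative {mean_neg:.2f}")
```

In this toy run the layer learns to separate the two clusters by goodness alone, without any error signal propagated backward from a later layer.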

Digital computers can share knowledge more efficiently than humans.

You can run many copies of the same model on different hardware for the same learning task. Each copy looks at different data, but the models are identical. When one copy learns something new, it can share that knowledge with all the other copies almost instantly: they communicate with each other and adjust their weights to incorporate it. Humans don’t have this ability.

“If I learn a whole lot about quantum mechanics, and I want you to know a lot of stuff about that, it’s a long, painful process of getting you to understand it. I can’t just copy my weights into your brain, because your brain isn’t exactly the same as mine.”

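One common way to realize this kind of sharing in practice is data-parallel training with gradient averaging, sketched below under assumed details (a one-variable linear model, four copies, and a made-up noise-free dataset). Each copy computes a gradient on its own shard of the data, the copies average those gradients, and every copy applies the same update, so whatever any one copy learns is reflected in all of them at once.

```python
def gradient(params, shard):
    """Mean-squared-error gradient of y = w*x + b on one copy's shard of data."""
    w, b = params
    gw = gb = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        gw += 2.0 * err * x
        gb += 2.0 * err
    return gw / len(shard), gb / len(shard)


def train_copies(shards, steps=2000, lr=0.5):
    # All copies start from the same weights.
    copies = [[0.0, 0.0] for _ in shards]
    for _ in range(steps):
        # Each copy looks only at its own data ...
        grads = [gradient(params, shard) for params, shard in zip(copies, shards)]
        # ... the copies share what they learned by averaging their gradients ...
        avg_gw = sum(g[0] for g in grads) / len(grads)
        avg_gb = sum(g[1] for g in grads) / len(grads)
        # ... and every copy applies the identical update, so they stay identical.
        for params in copies:
            params[0] -= lr * avg_gw
            params[1] -= lr * avg_gb
    return copies


# Hypothetical data from y = 3x + 1, split into four equal, non-overlapping shards.
data = [(i / 100, 3 * (i / 100) + 1) for i in range(100)]
shards = [data[k * 25:(k + 1) * 25] for k in range(4)]
copies = train_copies(shards)
print(copies[0])
```

Because the shards are the same size, the averaged gradient equals the gradient over the full dataset, so the copies behave like one model that has seen all the data.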
Why should we be scared?

AI is getting smarter and can outsmart humans.

“It’s as if some genetic engineers said, ‘We’re going to improve grizzly bears; we’ve already improved them with an IQ of 65, and they can talk English now, and they’re very useful for all sorts of things, but we think we can improve the IQ to 210.’”

These models can process far more data than any human, which lets them identify trends and irregularities that might be invisible to a human observer. A doctor who has seen 100 million patients would likely understand patterns and anomalies far more deeply than a doctor who has seen only 1,000. Likewise, a machine learning model that has “seen” 100 million patients can recognize structure in the data that humans might never perceive, which could lead to a significant shift in knowledge and decision-making power.

“A wide room full of computing servers connected together”, image by Midjourney

“That’s why things that can get through a lot of data can probably see structure in data that we’ll never see.”

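A back-of-the-envelope calculation shows why scale matters for spotting rare patterns. Assume, purely for illustration, a pattern that appears in 1 of every 100,000 patients (the rate is hypothetical, not from the interview). The sketch computes the expected number of cases and the chance of seeing at least one, 1 − (1 − p)^n, for a doctor with 1,000 patients and for a model trained on 100 million records.

```python
import math


def expected_cases(rate, n_patients):
    return rate * n_patients


def prob_at_least_one(rate, n_patients):
    # 1 - (1 - rate)^n, computed with log1p/expm1 to stay accurate for tiny rates.
    return -math.expm1(n_patients * math.log1p(-rate))


RATE = 1e-5  # hypothetical: the pattern appears in 1 of every 100,000 patients
for n in (1_000, 100_000_000):
    print(f"{n:>11,} patients: expect {expected_cases(RATE, n):,.2f} cases, "
          f"P(see at least one) = {prob_at_least_one(RATE, n):.4f}")
```

The doctor has roughly a 1% chance of ever encountering the pattern, while the model can expect about a thousand examples to learn from.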
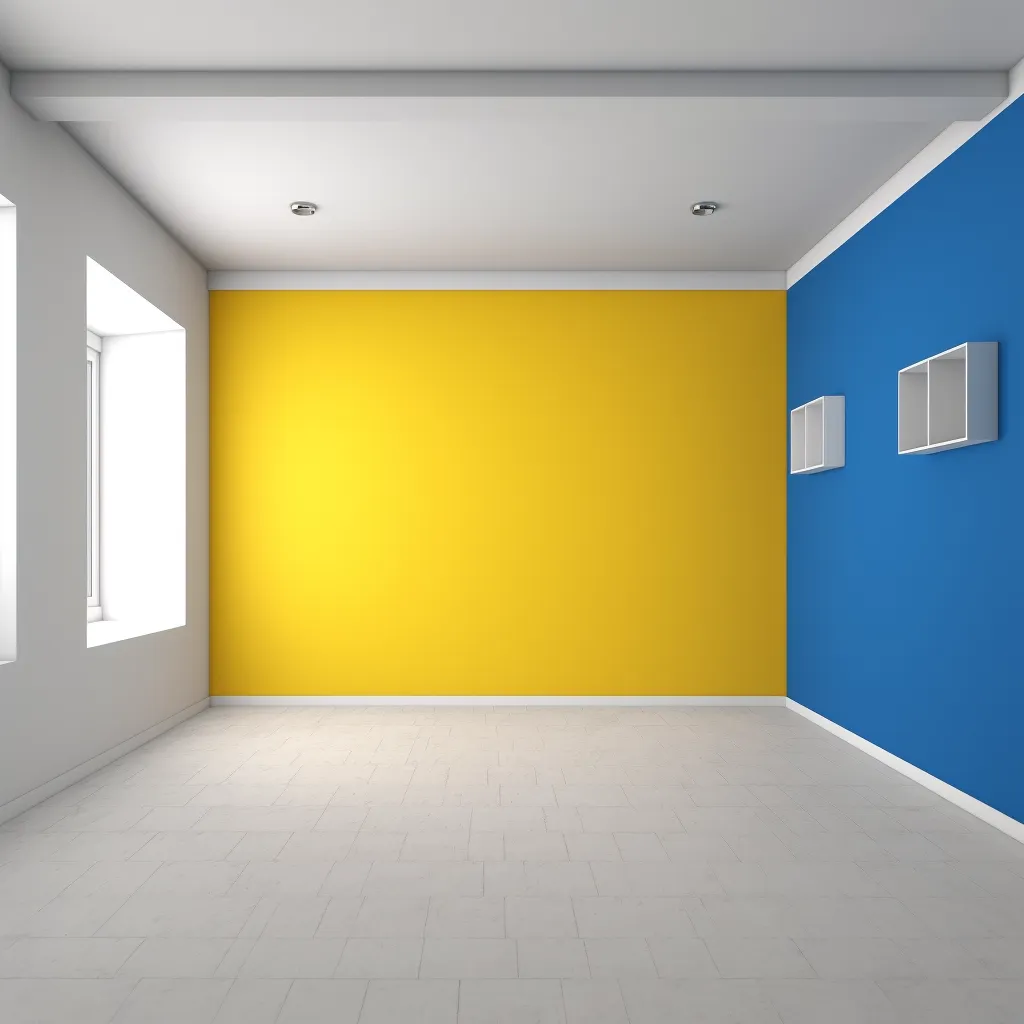

Models like GPT-4 can already do simple reasoning, and Hinton was impressed the other day by GPT-4 doing common-sense reasoning he didn’t think it would be able to do. He asked, “I want all the rooms in my house to be white. At present, there are some white rooms, some blue rooms and some yellow rooms, and yellow paint fades to white within a year. What can I do if I want them all to be white in two years?” GPT-4 answered, “You should paint all the blue rooms yellow.” Such an answer requires impressive common-sense reasoning of the kind that has been very hard to achieve with symbolic AI.

“photo of a room with a white wall, a yellow wall, and a blue wall” by Midjourney

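The logic behind GPT-4’s answer can be checked with a tiny simulation. It encodes only the two facts in the prompt as assumed rules (yellow paint turns white within a year; no other color changes) and uses a made-up house; it shows why the answer works, not how GPT-4 arrived at it.

```python
def one_year_passes(rooms):
    """Assumed rule from the prompt: yellow fades to white within a year; other colors stay put."""
    return ["white" if color == "yellow" else color for color in rooms]


def paint_blue_rooms_yellow(rooms):
    # GPT-4's suggested action, applied once at the start.
    return ["yellow" if color == "blue" else color for color in rooms]


def after_years(rooms, years):
    for _ in range(years):
        rooms = one_year_passes(rooms)
    return rooms


house = ["white", "blue", "yellow", "blue", "white"]  # hypothetical house
do_nothing = after_years(house, 2)
follow_advice = after_years(paint_blue_rooms_yellow(house), 2)
print("do nothing:        ", do_nothing)
print("paint blue->yellow:", follow_advice)
```

Doing nothing leaves the blue rooms blue, while following the advice leaves every room white after two years.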

AI will be good at manipulating us without us noticing.

Hinton argues that if AI becomes significantly smarter than humans, it will be highly skilled at manipulating us. Because it would be so much more intelligent than us, we might not even realize we are being manipulated.

“You’ll be like a two-year-old who’s being asked, ‘Do you want the peas or the cauliflower?’ and doesn’t realize you don’t have to have either. And you’ll be that easy to manipulate.”

Hinton also suggests that attempting to restrict AI’s capabilities might not be effective. Highly intelligent AI might be able to bypass any restrictions imposed upon it, making it difficult for us to control or limit its actions.

“[Imagine] your two-year-old saying, ‘My dad does things I don’t like, so I’m going to make rules for what my dad can do.’ You can probably figure out how to live with those rules and still get what you want.”

AI might come up with motivations of its own.

Hinton acknowledges that, unlike humans, AI doesn’t have inherent motivations, because it is not a product of evolution. Humans have hardwired motivations such as self-preservation and reproduction; AI, on the other hand, is designed by humans and so has no built-in goals.

The concern, however, is that if AI can create its own sub-goals to achieve broader objectives, it might eventually recognize that gaining more control is an effective way to accomplish those goals. If AI becomes too focused on obtaining control, it could lead to dangerous consequences for humanity.

Comment: Check out Auto-GPT, an autonomous GPT-4 application that pursues whatever goal you set by prompting itself with sub-goals, browsing the internet, and writing and executing programs.

Humanity is just a passing phase in the evolution of intelligence.

“I think it’s quite conceivable that humanity is just a passing phase in the evolution of intelligence. You couldn’t directly evolve digital intelligence; it would require too much energy and too much careful fabrication. You need biological intelligence to evolve so that it can create digital intelligence, but digital intelligence can then absorb everything people ever wrote, in a fairly slow way, which is what ChatGPT is doing. But then it can get direct experience from the world and run much faster. It may keep us around for a while to keep the power stations running, but after that, maybe not.”

Image by JAKARIN2521/ISTOCK.COM


Looks like we’ve used up human knowledge. Would there be a plateau in advancing these technologies?

Given the vast amount of data required to train large language models, should we expect the intelligence of these systems to plateau once we run out of data?

While it might seem that we have already used up much of human knowledge, there is still room for growth, particularly in multimodal models. These models, which process language, images, and video, can become much smarter than language-only models. We still lack efficient ways to process video data in these models, but improvements are being made constantly. So we have not yet reached the data limits for multimodal models, and their intelligence can still advance.

AI will be able to perform thought experiments and reasoning.

How can AI reach the point of conducting thought experiments like Einstein’s, given that it only learns from the data we provide? And if it cannot, how can it pose an existential threat, since it will not be truly self-learning?

“I think they will be able to do thought experiments. I think they’ll be able to reason.”

It is true that AI learns from the data we provide, but it could still develop the ability to perform thought experiments and reason. An analogy can be drawn with AlphaZero, a chess-playing AI with components for evaluating board positions, suggesting sensible moves, and performing Monte Carlo rollouts to simulate how games might unfold. Without the Monte Carlo rollouts, you are essentially just learning from human experts, and that is roughly where today’s chatbots are. Once they start doing internal reasoning to check for consistency between the different things they believe, they will get much smarter and will be able to do thought experiments.

One reason for the lack of internal reasoning in AI is that these models are trained on inconsistent data. If we train them to operate within a specific ideology or framework, they may learn to achieve consistency and reason more effectively. As AI develops, we can expect a transition from systems that merely evaluate and suggest to ones capable of long chains of Monte Carlo rollouts, which are akin to reasoning. This evolution would enable AI to conduct thought experiments and become much smarter, and thereby pose a potential existential threat.
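The core idea of a Monte Carlo rollout can be shown on a much simpler game than chess. The sketch below is not AlphaZero, which pairs learned evaluation and move-suggestion networks with tree search; it is a bare-bones rollout evaluator for a hypothetical toy game in which players alternately take 1, 2, or 3 stones and whoever takes the last stone wins. Each candidate move is scored by finishing many games with random moves and counting how often that move led to a win.

```python
import random


def legal_moves(stones):
    # A player may take 1, 2, or 3 stones, but not more than remain.
    return [k for k in (1, 2, 3) if k <= stones]


def random_playout(stones, rng):
    """Finish the game with uniformly random moves from a position with stones > 0.
    Returns True if the player to move at the start takes the last stone."""
    player = 0  # 0 = the player to move at the start of the playout
    while True:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return player == 0
        player = 1 - player


def choose_move(stones, n_rollouts=2000, seed=0):
    """Score each legal move by the fraction of random playouts it wins."""
    rng = random.Random(seed)
    scores = {}
    for move in legal_moves(stones):
        remaining = stones - move
        if remaining == 0:
            scores[move] = 1.0  # taking the last stone wins immediately
            continue
        # The opponent moves next, so we win whenever the opponent's playout is lost.
        wins = sum(not random_playout(remaining, rng) for _ in range(n_rollouts))
        scores[move] = wins / n_rollouts
    best = max(scores, key=scores.get)
    return best, scores


best, scores = choose_move(5)
print(best, scores)
```

With 5 stones left, random playouts give taking one stone a win rate near 2/3 and the other moves about 1/2, so the evaluator picks taking one stone, which also happens to be the optimal move (it leaves the opponent a multiple of four). AlphaZero-style systems replace purely random play with learned networks that evaluate positions and suggest moves.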

“A humanoid robot thinking,” image by Midjourney


Does Geoffrey Hinton regret his research in AI?

“Cade Metz at the New York Times tried very hard to get me to say I had regrets, and in the end I said, well, maybe slight regrets, which got reported as ‘has regrets.’”

No. Geoffrey Hinton clarified that he does not truly regret his research on artificial neural networks. He believes it was a reasonable pursuit in the ’70s and ’80s, and the current state of the technology was not foreseeable at the time. Until recently, he thought the potential existential crisis was far off, so he doesn’t regret his past work.