Something-of-Thoughts in LLM Prompting: An Overview of Structured LLM Reasoning

Chain-of-Thought (CoT), Tree-of-Thoughts (ToT), Graph-of-Thoughts (GoT), and beyond, … What are these thoughts?

“Tree of Thoughts”, generated by Midjourney

In the age of smartphones and smart homes, imagine an AI that doesn’t merely follow instructions, but actually thinks, grappling with complex logic just as we do. Sounds like science fiction, doesn’t it? However, if you’ve played around with ChatGPT, you’ve likely witnessed this astonishing capability firsthand. Even Hector Levesque, a renowned figure in AI reasoning, was so astounded that he once commented to AI legend Geoffrey Hinton: “How can such a stupid method (referring to neural networks) can deal with reasoning?”

While this story underscores the monumental advances in AI, the true essence of these advancements is found in the intricate dance of Large Language Models (LLMs) with reasoning. The entry point to this dance is Prompt Engineering: the art and science of optimizing the textual input provided to LLMs to elicit desired outputs. At its core, it’s about understanding how language models like ChatGPT, Bard, Claude, Llama, and others respond to different prompts, and then leveraging this knowledge to achieve specific results.

Think of LLMs as vast knowledge reservoirs. The way you phrase your question or statement (the prompt) determines how you tap into that reservoir. Just as humans might offer different answers based on how a question is posed, LLMs too can give varied responses based on the input.

In this article, you’ll receive a concise overview of various prompt engineering frameworks designed to enhance LLM reasoning, including:

- Chain-of-Thought

- Chain-of-Thought-Self-Consistency

- Tree-of-Thoughts

- Graph-of-Thoughts

- Algorithm-of-Thoughts

- Skeleton-of-Thought

- Program-of-Thoughts

Chain-of-Thought (CoT)

Instead of directly outputting an answer, provide the language model with intermediate reasoning examples to guide its response.

Chain-of-Thought (CoT) prompting has been recognized as one of the pioneering and most impactful prompt engineering techniques, enhancing the decision-making processes in large language models. Distinct from conventional prompting methodologies that emphasize direct input-output interactions, CoT compels a model to segment its reasoning into intermediary steps. This method draws parallels to human cognitive processes wherein intricate challenges are segmented into smaller, more manageable components.

To illustrate, consider a mathematical problem: “Roger possesses 5 tennis balls and subsequently purchases 2 cans of tennis balls, with each can containing 3 balls. How many tennis balls does he possess now?”. Rather than directly deducing the answer as 11, an individual might rationalize: “Initially, Roger has 5 balls. The combined total of 2 cans, each containing 3 balls, amounts to 6 balls. Summing the values, 5 + 6, yields 11.” Integrating such step-by-step analytical reasoning into the input prompt not only augments the accuracy of the model’s response but also accomplishes this without necessitating additional training datasets or alterations to the fundamental model configuration.

Chain-of-Thought Prompting, source: Wei et al. (2022)
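The worked example above can be turned into a few-shot prompt. The following is a minimal sketch, not the exact prompt from Wei et al.: the helper name `build_cot_prompt` and the baker question are made up for illustration, and the arithmetic from the tennis-ball example is checked directly in code.

```python
# Few-shot Chain-of-Thought prompt: the worked example includes its reasoning,
# so the model is nudged to reason step by step on the new question.
COT_EXAMPLE = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 balls. "
    "5 + 6 = 11. The answer is 11.\n"
)


def build_cot_prompt(question: str) -> str:
    """Prepend the worked example and leave the answer slot open (hypothetical helper)."""
    return f"{COT_EXAMPLE}\nQ: {question}\nA:"


# The arithmetic of the worked example, checked directly.
initial_balls, cans, balls_per_can = 5, 2, 3
total_balls = initial_balls + cans * balls_per_can

# Illustrative new question (not from the source article).
prompt = build_cot_prompt(
    "A baker has 4 trays of 6 rolls and sells 9. How many rolls are left?"
)
print(prompt)
```

The prompt string is what would be sent to the model; no training or model changes are involved, only the input text.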

Chain-of-Thought-Self-Consistency (CoT-SC)

Construct multiple chains of thought, then select the final answer that the chains agree on most often.

A subsequent advancement from the Chain-of-Thought framework is CoT-Self-Consistency. Instead of relying on a single reasoning path, this method samples several independent reasoning paths for the same query and aggregates their final answers, most simply by majority vote, before committing to one. This approach resembles ensemble techniques observed in traditional machine learning but is applied to thought sequences in large language models.
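The aggregation step can be sketched as a plain majority vote over the final answers. This is a minimal sketch under assumptions: the sampled chains are hard-coded stand-ins for model outputs, each chain is assumed to end with "The answer is X", and the function names are hypothetical.

```python
import re
from collections import Counter
from typing import List, Optional


def extract_answer(chain: str) -> Optional[str]:
    """Pull the final answer out of a chain containing 'The answer is X'."""
    match = re.search(r"The answer is (-?\d+(?:\.\d+)?)", chain)
    return match.group(1) if match else None


def self_consistent_answer(chains: List[str]) -> Optional[str]:
    """Unweighted majority vote over the final answers of several chains."""
    answers = [a for a in map(extract_answer, chains) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical sampled chains; in practice they would come from sampling the
# model several times with a non-zero temperature.
sampled_chains = [
    "5 balls plus 2 cans of 3 is 5 + 6 = 11. The answer is 11.",
    "2 cans times 3 balls is 6, and 6 + 5 = 11. The answer is 11.",
    "5 + 2 + 3 = 10. The answer is 10.",
]
final_answer = self_consistent_answer(sampled_chains)
print(final_answer)
```

Two of the three chains agree, so the faulty chain is outvoted. A weighted vote would replace the `Counter` with a sum of per-chain weights.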

Tree-of-Thoughts (ToT)

Expand on the chains of thought in a tree format. This allows for backtracking, exploring multiple branches of reasoning stemming from a single root idea.

Tree-of-Thoughts (ToT) offers a more structured prompting framework for LLM reasoning by breaking down complex problems into more manageable parts. Unlike CoT, which reasons along a single linked chain, ToT organizes its problem-solving strategy as a tree. Each node, referred to as a ‘thought,’ is a coherent language sequence serving as a step towards the final answer. By dividing problems into these discrete ‘thought’ units (anything from a few words in a crossword puzzle to one line of a mathematical equation), ToT ensures that each phase of the problem is systematically addressed.

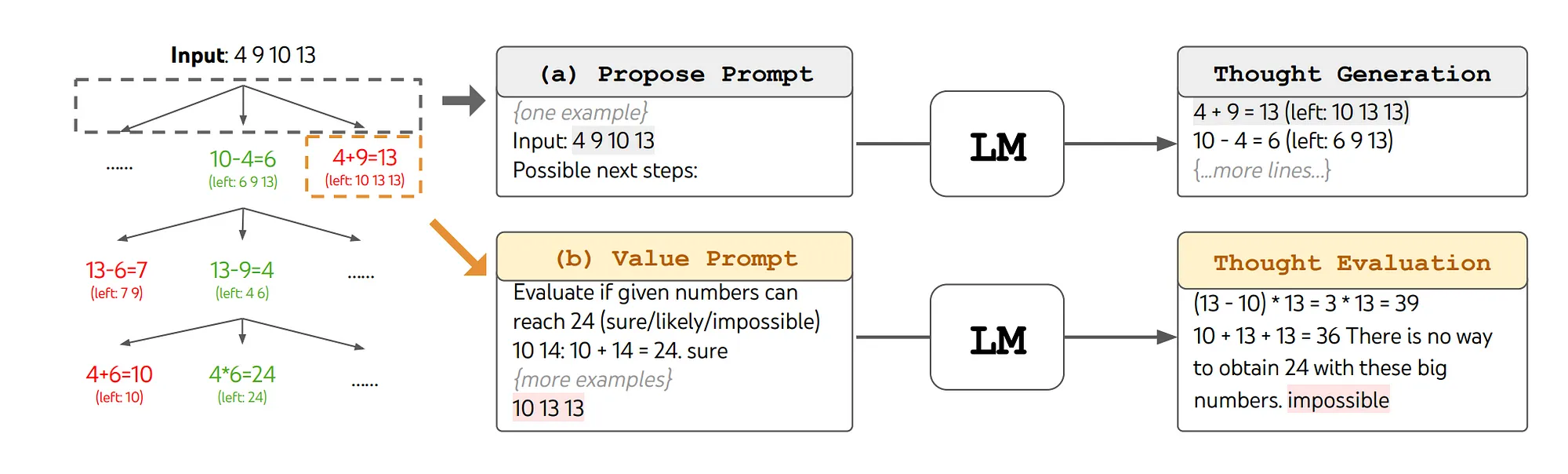

ToT reasoning in the game of 24, source: Yao et al. (2023)

The operational strength of ToT lies in its methodical organization. First, the system breaks down a problem and, from its current state, generates a list of potential reasoning steps or ‘thought’ candidates. These thoughts are then evaluated, with the system gauging the likelihood that each one will lead to the desired solution. Standard search algorithms, such as Breadth-first search (BFS) and Depth-first search (DFS), are used to navigate this tree, aiding the model in identifying the most effective sequence of thoughts.
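The generate, evaluate, and search loop can be sketched on the game of 24. This is a rough sketch, not the implementation from Yao et al.: `propose` enumerates arithmetic steps where ToT would ask the LLM for candidate thoughts, and `evaluate` is an exhaustive lookahead standing in for the LLM's judgment of how promising a state is. All function names are assumptions.

```python
from fractions import Fraction
from itertools import combinations
from typing import List, Optional, Tuple

# A state is (remaining numbers, steps taken so far).
State = Tuple[Tuple[Fraction, ...], Tuple[str, ...]]
TARGET = Fraction(24)


def propose(state: State) -> List[State]:
    """Thought generator: combine any two remaining numbers with one operation."""
    numbers, steps = state
    children = []
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = tuple(n for k, n in enumerate(numbers) if k not in (i, j))
        results = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                   (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
        if b != 0:
            results.append((a / b, f"{a} / {b}"))
        if a != 0:
            results.append((b / a, f"{b} / {a}"))
        for value, expr in results:
            children.append((rest + (value,), steps + (f"{expr} = {value}",)))
    return children


def evaluate(state: State) -> float:
    """State evaluator (stand-in for an LLM judgment): 1.0 if 24 is still reachable."""
    numbers, _ = state
    if len(numbers) == 1:
        return 1.0 if numbers[0] == TARGET else 0.0
    return max(evaluate(child) for child in propose(state))


def tot_bfs(numbers: List[int], beam_width: int = 5) -> Optional[Tuple[str, ...]]:
    """Breadth-first search keeping only the best `beam_width` states per level."""
    frontier: List[State] = [(tuple(Fraction(n) for n in numbers), ())]
    for _ in range(len(numbers) - 1):
        candidates = [child for state in frontier for child in propose(state)]
        frontier = sorted(candidates, key=evaluate, reverse=True)[:beam_width]
    for remaining, steps in frontier:
        if remaining == (TARGET,):
            return steps
    return None


solution = tot_bfs([4, 9, 10, 13])
print(solution)
```

Because the stand-in evaluator is exact, the beam never discards a solvable state. With an LLM evaluator the scores are heuristic, which is why the beam width and the choice between BFS and DFS matter in practice.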

ToT’s importance stems from its holistic design, adaptability, and efficiency. Chain-of-Thought prompting can be viewed as a special case of the ToT framework: a tree with a single branch and no search. ToT is also modular, meaning that individual components, from the initial breakdown of a problem to the search algorithm employed, can be varied independently of one another.

Graph-of-Thoughts (GoT)

Evolve the tree structure into a directed graph. This adds refinement steps (drawn as loops on a thought) that solidify a particular line of thought, and aggregation steps that merge multiple thoughts into a cohesive one.

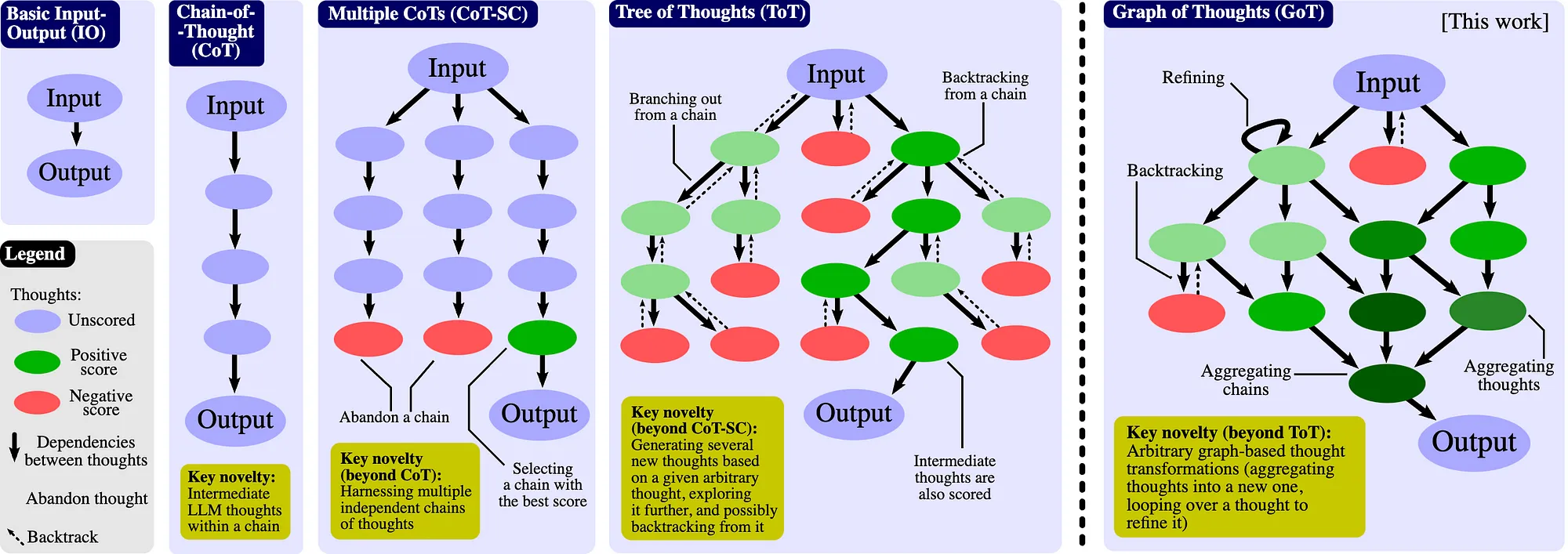

The Graph-of-Thoughts (GoT) framework represents an advanced progression from CoT and ToT methodologies. Central to the GoT framework is the conceptualization of ideas as vertices in a Directed Acyclic Graph (DAG). In this context, each vertex corresponds to a specific thought or solution — be it preliminary, intermediary, or terminal — elicited by an input stimulus. The directed edges within this graph depict the interdependency among these thoughts. Specifically, if an edge extends from thought t1 to t2, it signifies that t2 was conceived based on t1. This systematization permits a multiplicity of thoughts since nodes may be classified into distinct categories such as “plans” or “outcomes”.

Graph-of-Thoughts, source: Besta et al. (2023)

GoT’s novelty lies in its ability to apply transformations to these thoughts, further refining the reasoning process. The cardinal transformations encompass Aggregation, which allows for the fusion of several thoughts into a consolidated idea; Refinement, where continual iterations are performed on a singular thought to improve its precision; and Generation, which facilitates the conception of novel thoughts stemming from extant ones. Such transformations, with an emphasis on the amalgamation of reasoning routes, deliver a more intricate viewpoint relative to preceding models like CoT or ToT.

Furthermore, GoT introduces an evaluative dimension through Scoring and Ranking. Each individual thought, represented by a vertex, undergoes an assessment based on its pertinence and quality, facilitated by a designated scoring function. Importantly, this function contemplates the entire chain of reasoning, assigning scores that might be contextualized vis-a-vis other vertices in the graph. The framework also equips the system with the competence to hierarchize these thoughts predicated on their respective scores, a feature that proves instrumental when discerning which ideas warrant precedence or implementation.
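The transformations and the scoring and ranking step can be sketched with a small thought graph. This is an illustrative sketch, not the API of the Graph-of-Thoughts codebase: the `Thought` class and the function names are assumptions, Python's `sorted` and `heapq.merge` stand in for LLM calls, and the scoring function looks at a single vertex only, which is simpler than the chain-aware scoring described above.

```python
import heapq
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Thought:
    content: List[int]
    parents: List["Thought"] = field(default_factory=list)  # incoming edges
    score: float = 0.0


def generate(parent: Thought, parts: int) -> List[Thought]:
    """Generation: derive several new thoughts (sorted chunks) from one thought."""
    size = -(-len(parent.content) // parts)  # ceiling division
    return [Thought(sorted(parent.content[i:i + size]), [parent])
            for i in range(0, len(parent.content), size)]


def aggregate(thoughts: List[Thought]) -> Thought:
    """Aggregation: fuse several thoughts into one (merge sorted chunks)."""
    merged = list(heapq.merge(*(t.content for t in thoughts)))
    return Thought(merged, list(thoughts))


def refine(thought: Thought, fix: Callable[[List[int]], List[int]]) -> Thought:
    """Refinement: iterate on a single thought to improve it."""
    return Thought(fix(thought.content), [thought])


def score(thought: Thought) -> float:
    """Scoring: fewer out-of-order neighbours means a better thought."""
    c = thought.content
    return -float(sum(1 for x, y in zip(c, c[1:]) if x > y))


def rank(thoughts: List[Thought], k: int) -> List[Thought]:
    """Ranking: score every thought and keep the k best."""
    for t in thoughts:
        t.score = score(t)
    return sorted(thoughts, key=lambda t: t.score, reverse=True)[:k]


root = Thought([7, 2, 9, 4, 8, 1, 6, 3])
chunks = generate(root, parts=2)   # two thoughts derived from the root
merged = aggregate(chunks)         # one thought with two parents
refined = refine(root, sorted)     # a thought that improves on the root directly
best = rank([root, merged], k=1)[0]
print(best.content)
```

The `merged` thought has two incoming edges, which is exactly what a tree cannot express and what distinguishes GoT from ToT.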

Algorithm-of-Thoughts (AoT)

Maintain a single evolving context chain, eliminating the need for redundant queries as in Tree-of-Thoughts. It explores a mutable path of reasoning.

ToT and GoT address the LLM reasoning challenge through search-based mechanisms, producing a myriad of reasoning paths in tree or graph form. However, their heavy reliance on numerous LLM queries, sometimes numbering in the hundreds for a single problem, makes them computationally inefficient.

The Algorithm-of-Thoughts (AoT) offers an innovative method that features a dynamic and mutable reasoning path. By maintaining a single evolving thought context chain, AoT consolidates thought exploration, enhancing efficiency and reducing computational overhead.

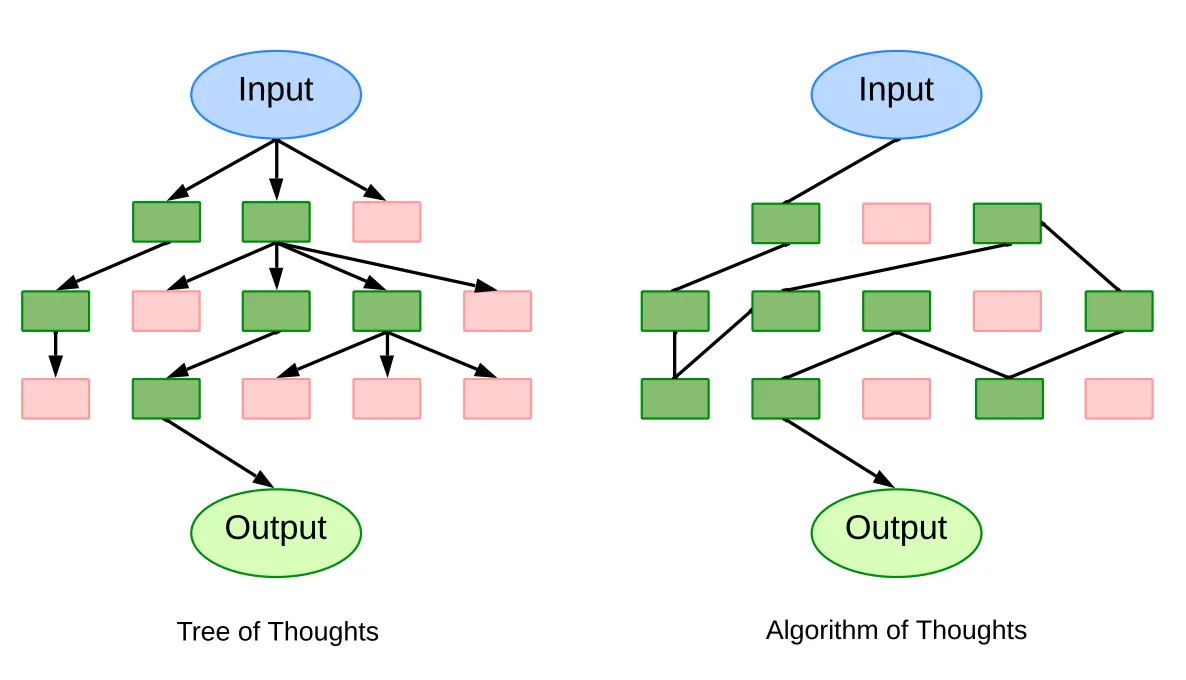

Algorithm-of-Thoughts. Each box signifies a distinct thought. Greens are promising thoughts while reds are less promising ones. Note that ToT has multiple queries while AoT maintains a single context, source: Sel et al. (2023)

The ingenuity behind AoT springs from the observation that LLMs, although powerful, occasionally revert to prior solutions when faced with new yet familiar problems. To overcome this, AoT assimilates in-context examples, drawing from time-tested search algorithms such as depth-first search (DFS) and breadth-first search (BFS). By emulating algorithmic behavior, AoT underscores the importance of achieving successful outcomes and gleaning insights from unsuccessful attempts.

The cornerstone of AoT lies in its four main components: 1) Decomposing complex problems into digestible subproblems, considering both their interrelation and the ease with which they can be individually addressed; 2) Proposing coherent solutions for these subproblems in a continuous and uninterrupted manner; 3) Intuitively evaluating the viability of each solution or subproblem without relying on explicit external prompts; and 4) Determining the most promising paths to explore or backtrack to, based on in-context examples and algorithmic guidelines.
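The four components above can be sketched as a single prompt whose in-context example reads like a search trace, dead end and backtrack included. This is a hedged sketch rather than the prompt from Sel et al.: the trace text, the function names, and the `fake_llm` stand-in are all assumptions made so the example runs without a model.

```python
from typing import Callable, List

# Hand-written in-context example that shows search behaviour inside one
# continuous piece of text: a dead end, a backtrack, then a solution.
AOT_EXAMPLE = """\
Problem: use 8 6 4 4 to reach 24.
Search:
1. 4 + 4 = 8 (left: 8 6 8)
   - 8 + 6 = 14 (left: 14 8): 22, 6, 112, 1.75. No 24, backtrack.
2. 8 - 6 = 2 (left: 4 4 2)
   - 4 + 2 = 6 (left: 6 4): 6 * 4 = 24. Found.
Answer: (4 + (8 - 6)) * 4 = 24
"""


def build_aot_prompt(numbers: List[int]) -> str:
    """One prompt: the search-style example, then the new problem."""
    problem = " ".join(str(n) for n in numbers)
    return f"{AOT_EXAMPLE}\nProblem: use {problem} to reach 24.\nSearch:\n"


def solve_with_aot(numbers: List[int], llm: Callable[[str], str]) -> str:
    """The whole exploration happens inside a single model call."""
    return llm(build_aot_prompt(numbers))


calls: List[str] = []


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: records the prompt and returns a canned trace."""
    calls.append(prompt)
    return ("1. 10 - 4 = 6 (left: 6 9 13)\n"
            "   - 13 - 9 = 4 (left: 6 4): 6 * 4 = 24. Found.")


trace = solve_with_aot([4, 9, 10, 13], fake_llm)
print(len(calls), "model call")
```

The contrast with a tree search is the call count: proposing, evaluating, and backtracking all happen inside one generated context instead of one query per node.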

Skeleton-of-Thought (SoT)

Generate an answer blueprint first, then flesh out the details in parallel, reducing the time taken to generate a complete response.

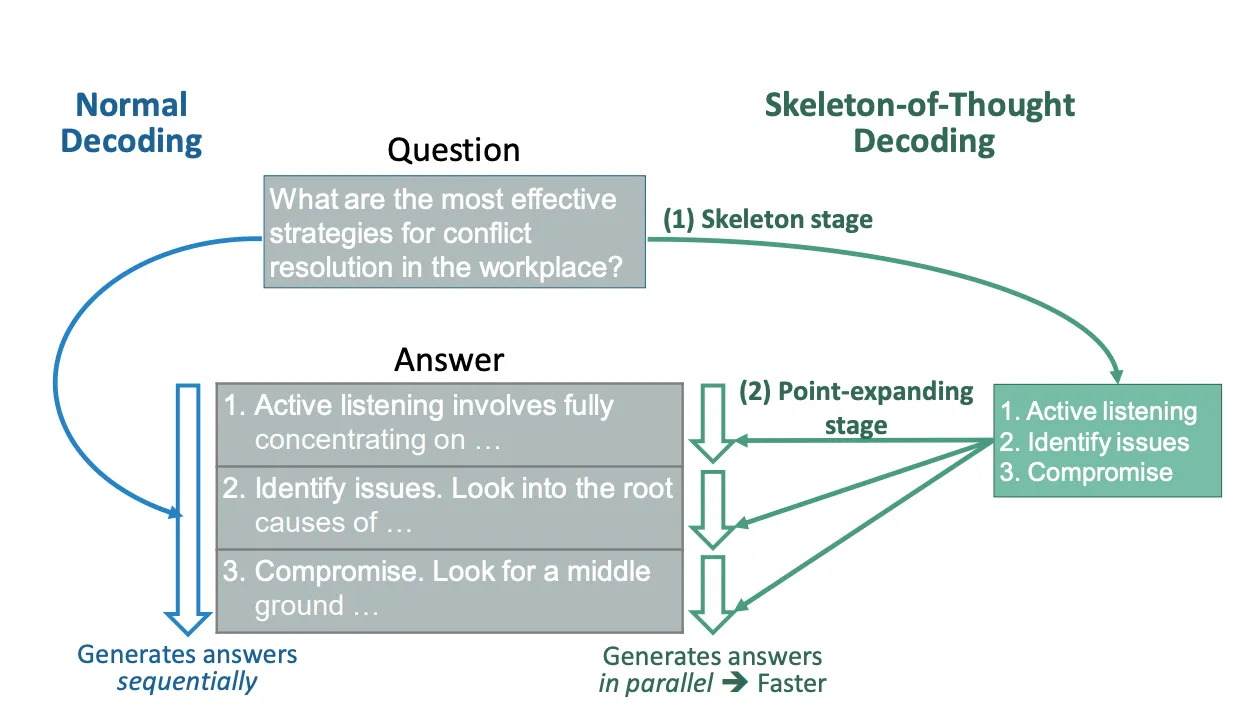

The Skeleton-of-Thought (SoT) paradigm is distinctively designed not primarily to augment the reasoning capabilities of Large Language Models (LLMs), but to address the pivotal challenge of minimizing end-to-end generation latency. The methodology operates based on a dual-stage approach that focuses on producing a preliminary blueprint of the answer, followed by its comprehensive expansion.

Skeleton-of-Thought, source: Ning et al. (2023)

In the initial “Skeleton Stage,” rather than producing a comprehensive response, the model is prompted to generate a concise answer skeleton. This abbreviated representation, elicited through a carefully crafted skeleton template, captures the core elements of the prospective answer and establishes a foundation for the subsequent stage.

In the ensuing “Point-Expanding Stage,” the LLM systematically amplifies each component delineated in the answer skeleton. Leveraging a point-expanding prompt template, the model concurrently elaborates on each segment of the skeleton. This dichotomous approach, which separates the generative process into preliminary skeletal formulation and parallelized detailed expansion, not only accelerates response generation but also strives to uphold the coherence and precision of the outputs.
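The two stages can be sketched with a thread pool for the parallel expansion. This is a minimal sketch under assumptions: the two prompt templates are invented for illustration and are not the templates from Ning et al., and `fake_llm` is a stand-in so the example runs without a model.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Hypothetical templates, written for this sketch only.
SKELETON_PROMPT = "Give only a numbered skeleton (3-5 short points) for: {question}"
EXPAND_PROMPT = "Question: {question}\nExpand this point in 1-2 sentences.\nPoint: {point}"


def parse_skeleton(skeleton: str) -> List[str]:
    """Extract the point text from lines such as '1. Define the goal'."""
    return re.findall(r"^\s*\d+\.\s*(.+?)\s*$", skeleton, flags=re.MULTILINE)


def skeleton_of_thought(question: str, llm: Callable[[str], str]) -> str:
    # Stage 1: a single call that returns the skeleton.
    points = parse_skeleton(llm(SKELETON_PROMPT.format(question=question)))
    # Stage 2: expand every point concurrently; map() keeps the original order.
    with ThreadPoolExecutor(max_workers=max(1, len(points))) as pool:
        expansions = list(pool.map(
            lambda p: llm(EXPAND_PROMPT.format(question=question, point=p)),
            points))
    return "\n".join(f"{i}. {p}: {e}"
                     for i, (p, e) in enumerate(zip(points, expansions), 1))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call, used only to make the sketch runnable."""
    if prompt.startswith("Give only a numbered skeleton"):
        return "1. Define the goal\n2. Collect evidence\n3. Summarize findings"
    return "details about " + prompt.rsplit("Point: ", 1)[1].lower()


answer = skeleton_of_thought("How do I plan a study?", fake_llm)
print(answer)
```

The latency gain comes from stage 2: the point expansions do not depend on each other, so they can be issued as concurrent API calls or as one batched decoding pass.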

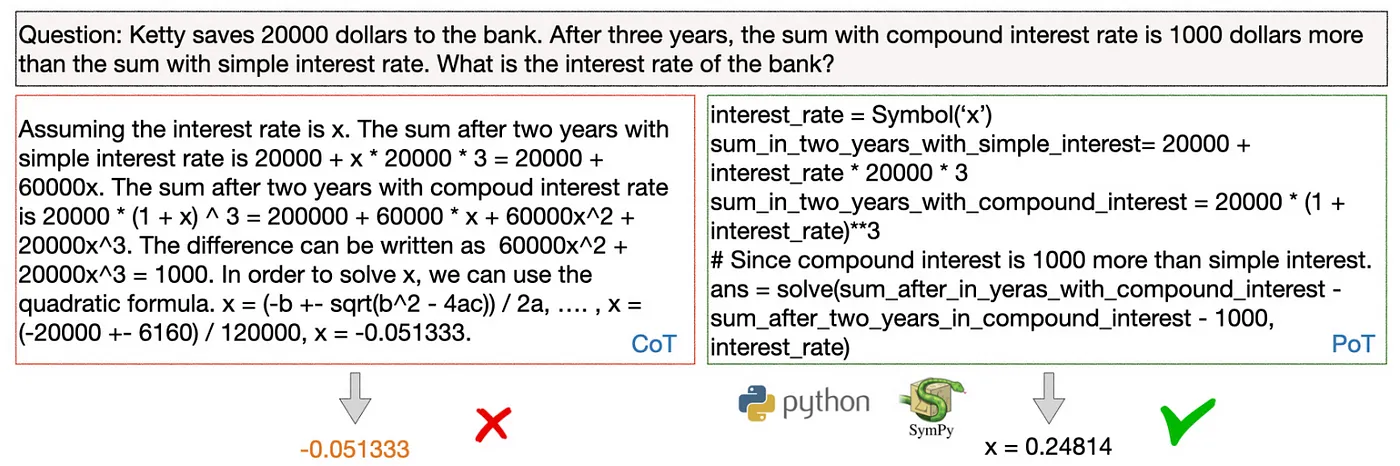

Program-of-Thoughts (PoT)

Formulate the reasoning behind question answering as an executable program, incorporating the program interpreter’s output as part of the final answer.

Program-of-Thoughts (PoT) is a unique approach to LLM reasoning. Instead of merely generating an answer in natural language, PoT asks the model to write an executable program, which can be run on an interpreter such as Python to produce a concrete result. In contrast to direct answering, PoT breaks reasoning down into sequential steps and binds semantic meaning to variables, while delegating the actual computation to the interpreter. As a result, PoT offers a clearer, more expressive, and grounded model of how answers are derived, enhancing accuracy and understanding, especially for math-type logical questions where numerical calculations are needed.

It is important to note that the program execution of PoT is not necessarily targeting the final answer but can be part of the intermediate step to the final answer.
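The execution step can be sketched with the tennis-ball problem from the Chain-of-Thought section. This is a minimal sketch under assumptions: the program text is hard-coded where a model's output would normally go, the result variable name `ans` is a convention chosen for this example, and `run_program` is a hypothetical helper. Emptying `__builtins__` is not a security sandbox; untrusted generated code should run in an isolated process.

```python
from typing import Any, Dict

# Stand-in for model output: the reasoning is written as code with
# semantically named variables instead of free-form arithmetic.
GENERATED_PROGRAM = """
initial_balls = 5
cans_bought = 2
balls_per_can = 3
ans = initial_balls + cans_bought * balls_per_can
"""


def run_program(program: str, result_name: str = "ans") -> Any:
    """Execute the generated program and return the variable holding the result."""
    namespace: Dict[str, Any] = {"__builtins__": {}}  # no builtins exposed
    exec(program, namespace)
    return namespace[result_name]


# The interpreter output is an intermediate value that feeds the final answer.
result = run_program(GENERATED_PROGRAM)
final_answer = f"Roger has {result} tennis balls."
print(final_answer)
```

The model is responsible only for setting up the computation; the interpreter carries it out, which removes arithmetic slips from the reasoning chain.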

Comparison between CoT and PoT, source: Chen et al. (2022)

In the ever-evolving realm of AI, structured reasoning frameworks like Chain-of-Thought have dramatically transformed how we perceive and harness the power of Large Language Models. They symbolize a shift towards models that not only regurgitate information but also engage in intricate reasoning, much akin to human cognitive processes. As we look ahead, the potential horizons seem limitless. Imagine an AI, adept at generating not only accurate answers but also robust, programmable solutions or having the ability to visualize its thought processes, making AI-human collaboration even more seamless. Such advancements, building upon the foundational frameworks explored in this article, herald a future where LLMs become indispensable companions in problem-solving, creativity, and decision-making, catalyzing a paradigm shift in our symbiotic relationship with technology.